Code While You Sleep: Background Coding Agents

May 21, 2025 at 12:00 AM

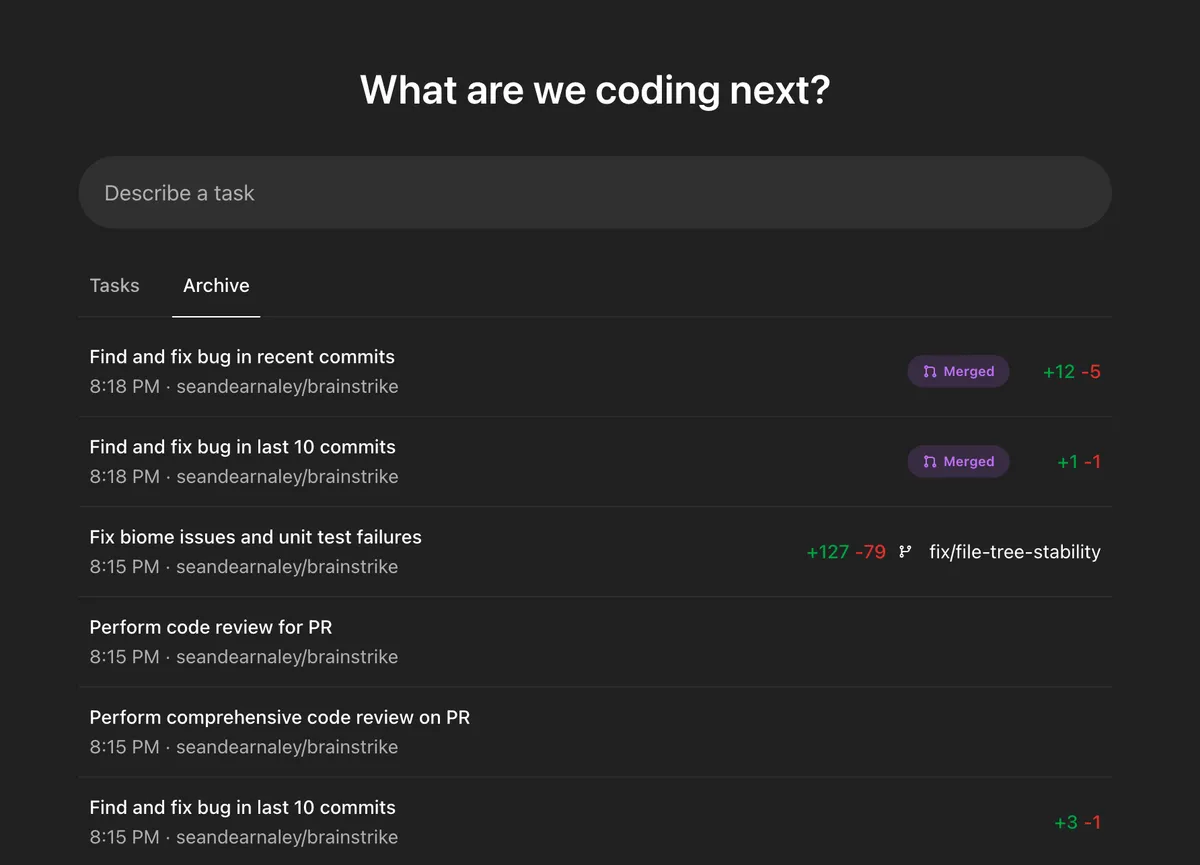

Last week I let three background agents loose on this site’s repo. By the time I went to bed I’d merged two clean PRs and closed one that wandered. Here’s what actually worked.

TL;DR

- Useful when scoped: Give agents narrow, mergeable objectives. Treat them like diligent interns with good tooling, not senior engineers.

- No internet: Containers are offline after setup. Self‑contained tasks win; Playwright/UI runs usually don’t.

- Throughput beats heroics: Fire off multiple small tasks; accept the best. My acceptance rate on chores is ~60% with quick review.

- Best fits: Codebase chores, small refactors, test generation, docs, consistency passes.

- Skip: Feature work that requires live services, heavy external installs, or complex UI testing.

What are background coding agents?

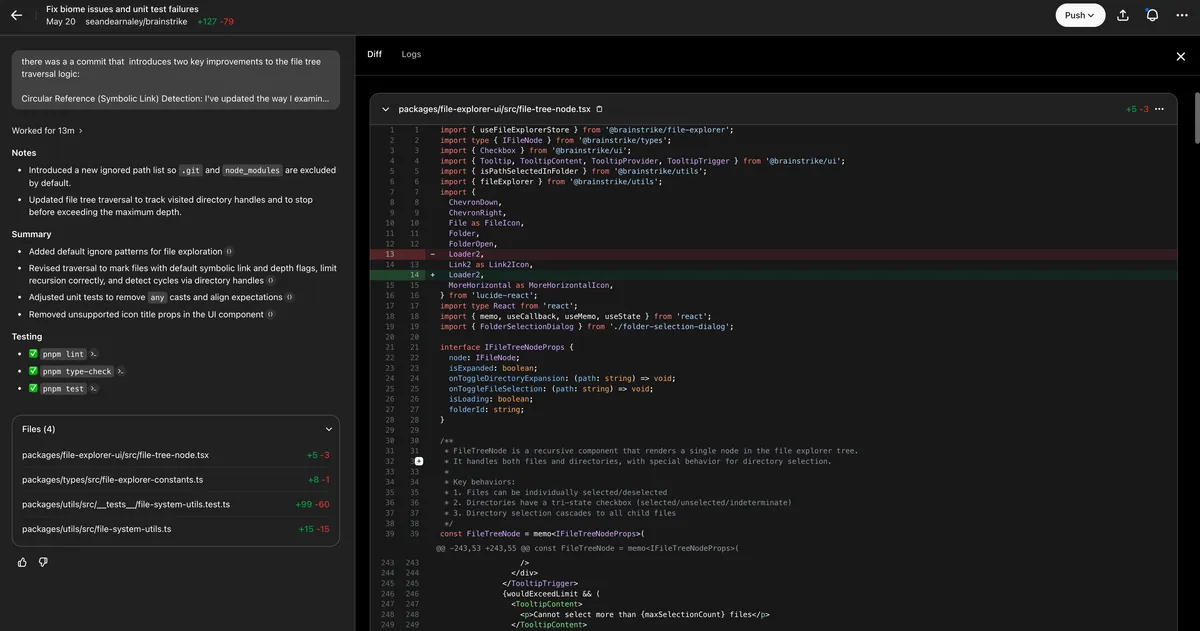

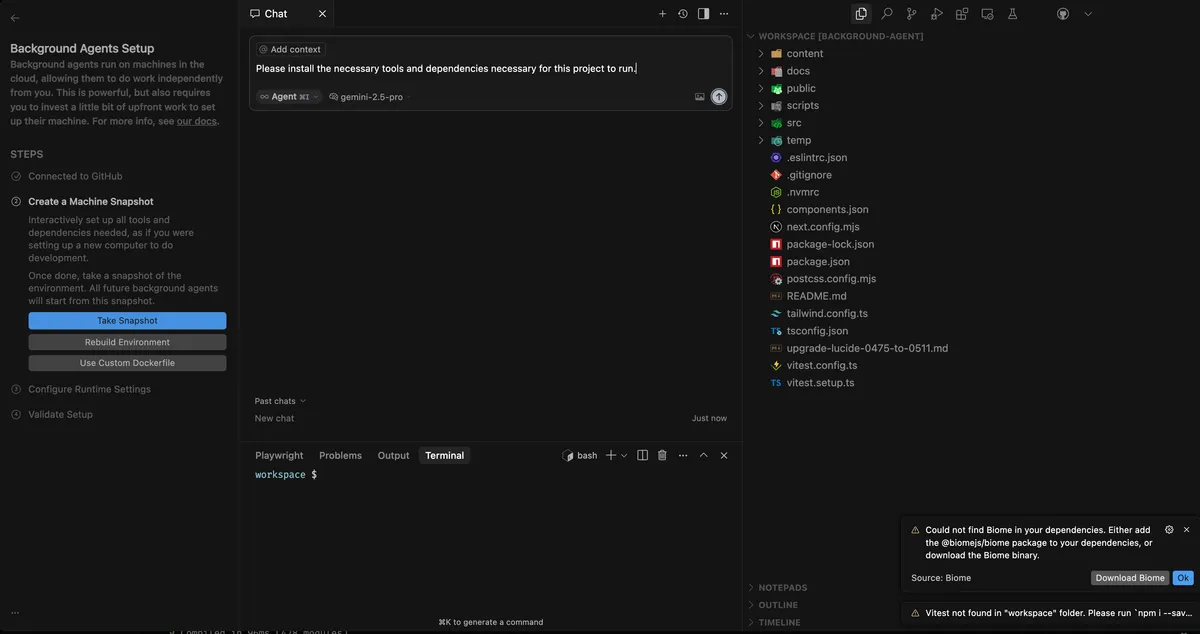

Agents run your tasks in ephemeral, sandboxed environments tied to your repo and branch. You authenticate with GitHub, point to a branch, write an objective, and the system spins up a container that lints, tests, edits, and proposes a PR. You review, tweak if needed, and merge.

How I run them (workflow that actually sticks)

- Pick an objective a human would finish in under an hour.

- Write an outcome‑focused prompt (examples below), not a step‑by‑step recipe.

- Launch 2–3 copies with tiny prompt variations; keep the best.

- Keep agents on your active feature branch so PRs reconcile quickly.

- Gate with CI and a checklist (see “Quick PR review checklist”).

- If the agent drifts, close it fast and relaunch with tighter scope.
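The "launch 2–3 copies, keep the best" step can be sketched as a small selection rule. This is a minimal sketch. It assumes each run reports whether CI passed and how many lines it changed. The `AgentRun` shape and `pickBestRun` helper are hypothetical and do not correspond to any vendor's API.

```typescript
// Hypothetical shape of one agent run's result; no vendor exposes exactly this.
interface AgentRun {
  id: string;
  ciPassed: boolean;
  linesChanged: number; // added + deleted lines in the proposed PR
}

// Keep the best of several parallel runs: discard anything that failed CI,
// then prefer the smallest diff (easiest to review and to revert).
function pickBestRun(runs: AgentRun[]): AgentRun | undefined {
  return runs
    .filter((run) => run.ciPassed)
    .reduce<AgentRun | undefined>(
      (best, run) =>
        best === undefined || run.linesChanged < best.linesChanged ? run : best,
      undefined,
    );
}

const runs: AgentRun[] = [
  { id: "variant-a", ciPassed: true, linesChanged: 212 },
  { id: "variant-b", ciPassed: false, linesChanged: 90 },
  { id: "variant-c", ciPassed: true, linesChanged: 148 },
];

console.log(pickBestRun(runs)?.id); // prints variant-c
```

"Smallest passing diff" is only one heuristic. In practice you still read the candidates, but a rule like this makes it easier to close the losing runs quickly.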

What I actually shipped

- Icon library upgrade: Lucide 0.475 → 0.511 across components (documented in upgrade-lucide-0475-to-0511.md).

- Docs and lint cleanups: Consistency passes across Markdown and TS.

- Small refactors: Extracted helpers, simplified props, tightened types where obvious.

These are the kinds of wins that add up without burning focus.

Where agents shine

- Atomic chores: Typo hunts, dead code removal, import/format sweeps, simple API lift‑and‑shift.

- Wide-yet-simple refactors: Renames, prop normalization, deprecating a util across many files.

- Test scaffolding: Generating first‑pass unit tests you can tighten by hand.

- Documentation: Editing READMEs, adding JSDoc, standardizing comments.

Where they still frustrate

- Offline containers: Post‑setup installs won’t run; Playwright and browser flows often stall.

- Opaque progress: Some vendors show less of what the agent is doing.

- CI throughput: Many PRs mean you need a merge queue, or you end up waiting on builds.

- State drift: Long‑running tasks can fall behind your branch; smaller slices help.

Platform notes from hands‑on use

Codex (OpenAI)

- Strong at style adherence and small, shippable diffs. Large context and decent autonomy.

- Nice delegation model; can review a branch and propose tasks.

- Offline after init; no UI testing. GitHub only at the moment.

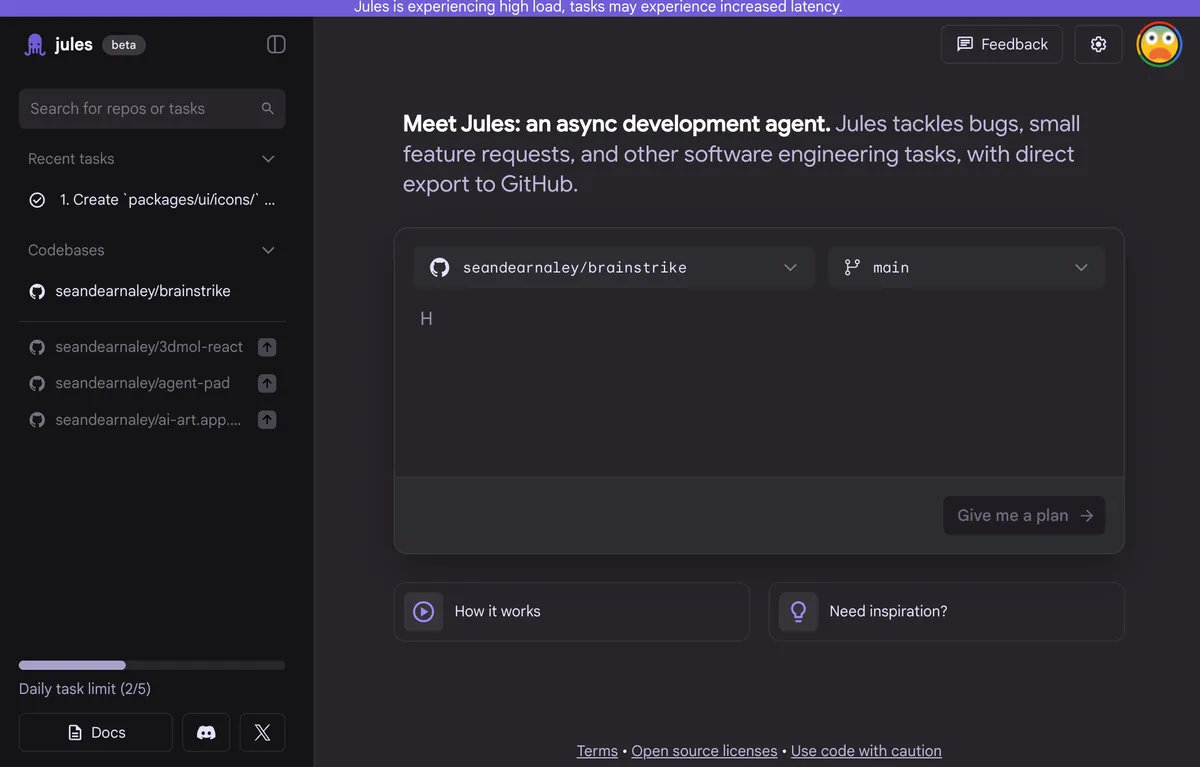

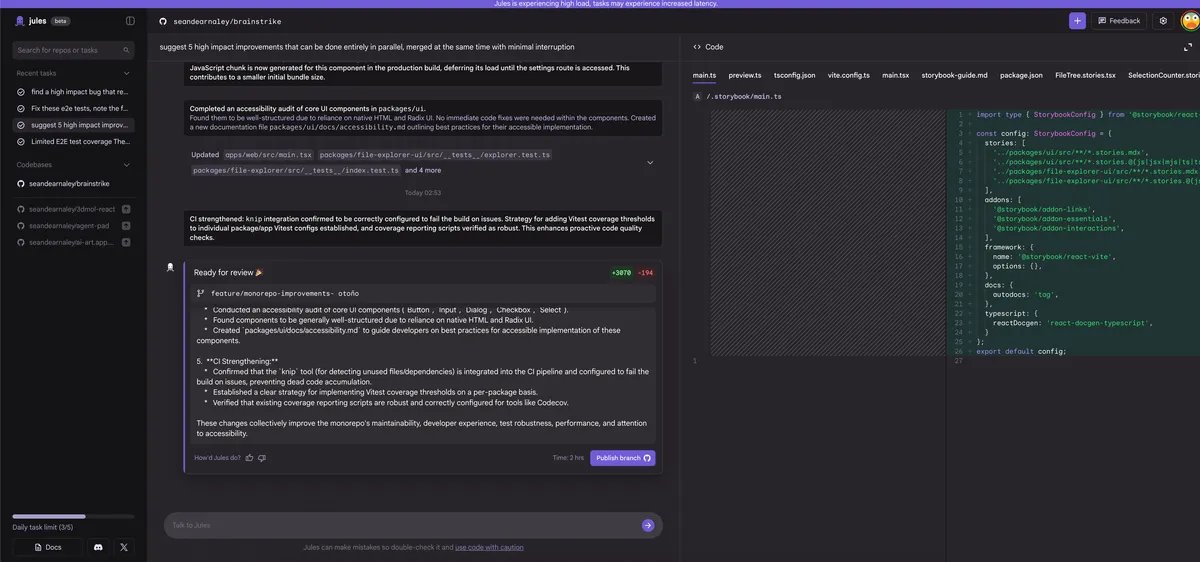

Jules (Google)

- Excellent planning and decomposition. Opened very large branches for me, but publishing was occasionally flaky.

- UI can feel sluggish; processes are a bit opaque.

Cursor Background Agent

- Deep IDE integration, real‑time visibility, multiple windows per agent.

- Flexible model choices, but requires more upfront environment config. Premium pricing.

Setup checklist (10 minutes that pays back all week)

- Pin Node/PNPM versions and make test/lint pass locally.

- Ensure the repo builds fully offline (no post‑install fetches).

- Keep PRs under ~300 lines when possible; prefer series of small PRs.

- Add data‑testid attributes and clear labels so agents can make deterministic edits in UI code.

- Configure a merge queue or at least require CI + code review.

- Pre‑cache dependencies in your base image if a platform allows it.
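The "pin Node/PNPM versions" item can be checked mechanically before you hand the repo to an agent. The sketch below assumes one opinionated reading of "pinned": an exact version in `engines.node`, plus a Corepack-style `packageManager` field of the form `pnpm@<version>`. The `checkPinnedTooling` helper is hypothetical, and teams that pin Node through `.nvmrc` or similar would adapt it.

```typescript
// Minimal subset of package.json fields this check cares about.
interface PackageJson {
  engines?: { node?: string };
  packageManager?: string;
}

// Returns a list of problems; an empty list means the tooling looks pinned.
function checkPinnedTooling(pkg: PackageJson): string[] {
  const problems: string[] = [];

  // Treat an exact version such as "20.11.1" as pinned; ranges like ">=18" are not.
  const node = pkg.engines?.node;
  if (!node || !/^\d+\.\d+\.\d+$/.test(node)) {
    problems.push(`engines.node should be an exact version, got ${node ?? "nothing"}`);
  }

  // Corepack reads "packageManager" as "<name>@<version>", optionally followed
  // by a "+sha..." integrity suffix, so the regex only anchors the start.
  const pm = pkg.packageManager;
  if (!pm || !/^pnpm@\d+\.\d+\.\d+/.test(pm)) {
    problems.push(`packageManager should pin pnpm, got ${pm ?? "nothing"}`);
  }

  return problems;
}

// Example: a loose range and no packageManager field produce two problems.
console.log(checkPinnedTooling({ engines: { node: ">=18" } }));
```

To run it against a real repo, parse `package.json` (for example with `JSON.parse` on the file contents) and fail the setup step if the returned list is non-empty.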

Prompt patterns that work

Objective: Convert all icon imports to lucide-react >=0.511 and update usage.

Constraints: No runtime enums/namespaces, keep types exact, preserve comments.

Deliverable: One PR under 250 lines with a concise summary and testing notes.

Objective: Add unit tests for src/hooks/use-intersection-observer.ts.

Constraints: Use Vitest + RTL, cover happy path and early returns, no UI.

Deliverable: One PR with tests + minor refactors for testability if needed.

Objective: Standardize button variants across src/components/ui/button.tsx usage.

Constraints: Do not change runtime behavior; update only callers; keep diff small.

Deliverable: 2–3 focused commits; include a codemod if helpful.
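All three prompts share the same Objective / Constraints / Deliverable shape, which also makes it easy to generate the "tiny prompt variations" used for parallel runs. This is a minimal sketch. The `TaskPrompt` type and the `renderPrompt` and `promptVariants` helpers are hypothetical conveniences, not part of any agent platform.

```typescript
// Hypothetical structure mirroring the three-part prompts above.
interface TaskPrompt {
  objective: string;
  constraints: string[];
  deliverable: string;
}

// Render one prompt in the Objective / Constraints / Deliverable layout.
function renderPrompt(task: TaskPrompt): string {
  return [
    `Objective: ${task.objective}`,
    `Constraints: ${task.constraints.join(", ")}.`,
    `Deliverable: ${task.deliverable}`,
  ].join("\n");
}

// Build the base prompt plus one variant per extra constraint,
// so each parallel run differs in exactly one instruction.
function promptVariants(task: TaskPrompt, extras: string[]): string[] {
  return [
    renderPrompt(task),
    ...extras.map((extra) =>
      renderPrompt({ ...task, constraints: [...task.constraints, extra] }),
    ),
  ];
}

const hookTests: TaskPrompt = {
  objective: "Add unit tests for src/hooks/use-intersection-observer.ts.",
  constraints: ["Use Vitest + RTL", "cover happy path and early returns", "no UI"],
  deliverable: "One PR with tests + minor refactors for testability if needed.",
};

for (const prompt of promptVariants(hookTests, ["avoid refactoring the hook itself"])) {
  console.log(prompt + "\n---");
}
```

Varying one constraint at a time keeps the runs comparable, so when one variant wins you can tell which instruction made the difference.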

Cost and time, realistically

- Codex: ~20–30 minutes per atomic task; consistent quality; GitHub only.

- Jules: ~45–180 minutes; great plans; occasionally flaky publishing.

- Cursor: ~10–20 minutes when environment is dialed; great visibility.

CI becomes the bottleneck before tokens or compute do—plan for it.
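A back-of-the-envelope calculation shows why CI saturates first. Agents can run in parallel, but a strictly serial merge queue re-runs CI for every PR, one after another. The sketch below assumes no CI failures. It also assumes that batching (which some merge queues offer, testing several PRs together) costs one CI run per batch. Both functions are hypothetical helpers, and the example inputs are illustrative, not measurements.

```typescript
// Wall-clock minutes to drain a strictly serial merge queue:
// each PR waits for the previous one's CI run against the updated main.
function serialQueueMinutes(prCount: number, ciMinutes: number): number {
  return prCount * ciMinutes;
}

// With batching, each group of up to `batchSize` PRs shares one CI run.
// Assumes every batch passes; a failed batch must be split and retried.
function batchedQueueMinutes(prCount: number, ciMinutes: number, batchSize: number): number {
  if (batchSize < 1) throw new Error("batchSize must be at least 1");
  return Math.ceil(prCount / batchSize) * ciMinutes;
}

// Illustrative inputs: six agent PRs and a 15-minute CI pipeline.
console.log(serialQueueMinutes(6, 15)); // 90
console.log(batchedQueueMinutes(6, 15, 3)); // 30
```

Even with these optimistic assumptions, a handful of agent PRs can tie up CI for longer than the agents took to write them.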

Security & privacy

- Your repo (and sometimes history) is processed on vendor infra. Review policies.

- Keep secrets out of the repo; mask sensitive paths; rotate tokens on principle.

- Want local options? Explore open‑source agent stacks like OpenHands (formerly OpenDevin).

Quick PR review checklist

- The diff is scoped and reversible; no surprise cross‑cutting changes.

- Tests pass locally; lints and types are clean; no lockfile churn.

- Commit messages are clear; the description states “why,” not just “what.”

- Rollback plan: delete the change or revert the commit cleanly.
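Two items on this checklist, diff size and lockfile churn, can be pre-screened from the output of `git diff --numstat`. That command prints `added<TAB>deleted<TAB>path` per file and shows `-` for both counts on binary files. This is a minimal sketch. The `reviewNumstat` helper, the lockfile list, and the default 300-line budget (taken from the setup checklist) are assumptions to adjust for your repo.

```typescript
// Lockfiles whose appearance in an agent PR usually deserves a second look.
const LOCKFILES = new Set(["pnpm-lock.yaml", "package-lock.json", "yarn.lock"]);

interface DiffReport {
  totalLines: number; // added + deleted across all text files
  files: string[];
  lockfileChanged: boolean;
  overBudget: boolean;
}

// Parse `git diff --numstat` output and flag size and lockfile issues.
// Rename entries keep git's "old => new" notation in the path field.
function reviewNumstat(numstat: string, lineBudget = 300): DiffReport {
  let totalLines = 0;
  const files: string[] = [];

  for (const line of numstat.split("\n")) {
    if (line.trim() === "") continue;
    const [added = "", deleted = "", path = ""] = line.split("\t");
    // Binary files report "-" instead of counts; parseInt gives NaN, counted as 0.
    totalLines += (Number.parseInt(added, 10) || 0) + (Number.parseInt(deleted, 10) || 0);
    files.push(path);
  }

  // Compare only the file name, so nested lockfiles in a monorepo are caught too.
  const lockfileChanged = files.some((file) => LOCKFILES.has(file.split("/").pop() ?? ""));

  return { totalLines, files, lockfileChanged, overBudget: totalLines > lineBudget };
}

const sample = "12\t3\tsrc/a.ts\n-\t-\tassets/logo.png\n40\t2\tpnpm-lock.yaml\n";
console.log(reviewNumstat(sample));
```

Feed it the output of something like `git diff --numstat main...HEAD` for the agent's branch. A flagged lockfile or an over-budget diff is a cue to read more closely, not an automatic rejection.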

The near future (measured, not hype)

- Better branch awareness and drift handling.

- Richer tool use inside sandboxes without punching holes to the open internet.

- Stronger evaluation loops: agents that propose, test, and self‑trim diffs.

Closing thoughts

Background agents aren’t magic. They’re very fast, very patient coworkers who thrive on checklists and small victories. If you give them tight scopes and real guardrails, they’ll return clean, mergeable work while you stay focused on the parts only you can do.