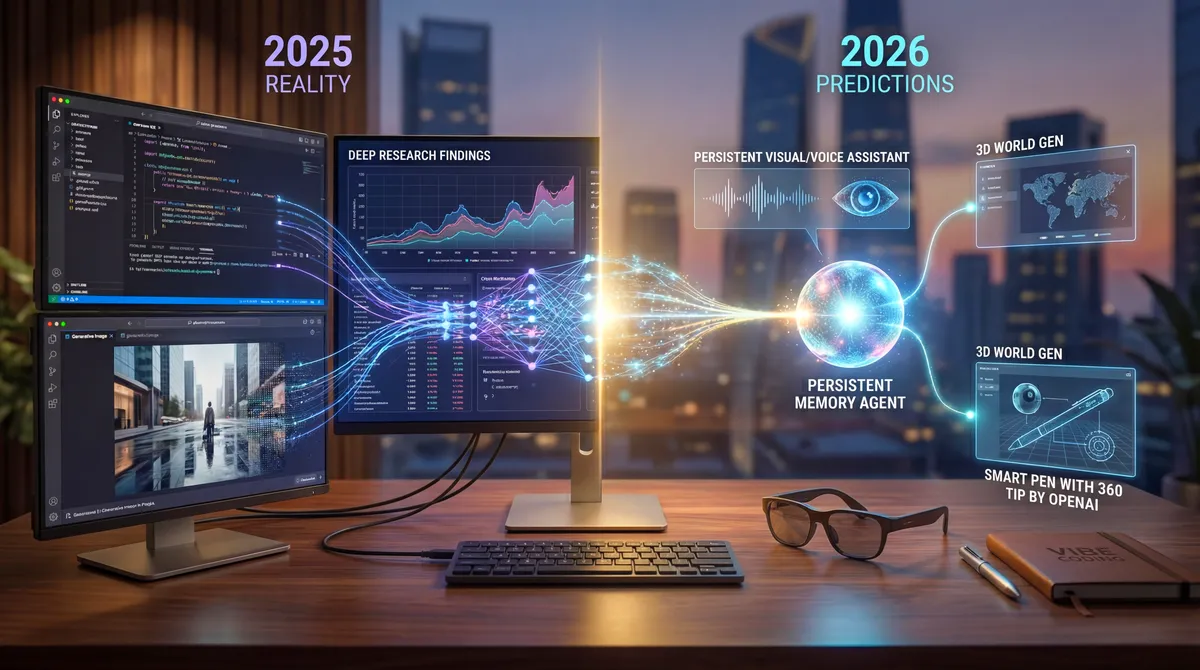

2025 Reality / 2026 Predictions

January 1, 2026 at 9:00 PM

A Year of Refinement Becomes a Year of Reality

January 2026

A year ago, I made my 2025 predictions, wondering whether AI coding tools would reshape workflows, whether agents would finally become useful, and whether the big labs would keep the momentum going. Let's see how I did, and what I think is coming next.

Part One: How My 2025 Predictions Held Up

AI IDE Boom: Nailed It

I predicted that Cursor, Windsurf, and GitHub Copilot would gain serious traction and reshape workflows. That turned out to be an understatement.

Cursor's growth wasn't just "vibes"—it became one of the defining stories of the year. Copilot closed the feature gap, but Cursor pulled even further ahead. And Windsurf? That story got complicated in a way I didn't anticipate.

Fact Box: Cursor's 2025 Growth

Anysphere (Cursor's parent company) hit a $29.3 billion valuation in their November 2025 Series D, raising $2.3 billion. Annualized revenue exceeded $1 billion, with enterprise revenue growing 100x during 2025. Both NVIDIA and Google became investors.

Sources: TechCrunch, The Information

Windsurf didn't get acquired by Google in the traditional sense. What actually happened was more interesting: Google struck a $2.4 billion deal that was part licensing agreement, part talent acquisition. About $1.2 billion went to investors, and another $1.2 billion went to compensation for roughly 40 employees who joined Google DeepMind. Cognition (the makers of Devin) then acquired the remaining Windsurf company for around $250 million.

In November 2025, Google launched Antigravity, its new agent-first coding IDE built for Gemini 3. Whether Windsurf's technology directly powered Antigravity hasn't been officially confirmed, but the timing is suggestive.

Fact Box: The Windsurf Deal Structure

- July 2025: OpenAI's reported acquisition talks with Windsurf fell apart

- July 2025: Google's licensing + talent deal ($2.4B)

- July 2025: Cognition acquired the remaining Windsurf business (~$250M)

- November 2025: Google launched Antigravity

Sources: Reuters, iTechs Online

What really surprised me was the shift in attitude. At the start of 2025, people were literally laughing at the idea that AI coding would take over in any meaningful capacity. Mark Zuckerberg and Dario Amodei at Anthropic were making predictions about how much code would be AI-written, and skeptics rolled their eyes.

By the end of the year, even the skeptics were using these tools themselves. That's really saying something.

Fact Box: AI-Written Code Statistics

There's no credible global measurement for "% of all code written," but company-level claims tell the story:

- Microsoft: 20-30% of code in some projects is AI-generated (Satya Nadella, April 2025)

- Google: >30% of new code is AI-generated and accepted (Sundar Pichai)

- Meta: Mark Zuckerberg predicted AI would do ~50% of development work within a year (LlamaCon, April 2025)

- Anthropic: Dario Amodei predicted AI would write 90% of code within 6 months (March 2025)—controversial, but he later claimed this materialized at Anthropic

- GitHub: 15 million developers using Copilot; studies show 46% code acceptance rates when enabled

Sources: CNBC, Second Talent Statistics, Index.dev

Agent coding in particular got really good, and it has a flywheel that'll continue into 2026. You could argue that some models are now just pushing weights around, and their intelligence level is adequate for most software development tasks. But there's still a human in the loop, and a lot of engineers have had to come to terms with developing entirely new skill sets.

The job displacement question has been interesting. Juniors have been affected to some extent—if you're at the bottom of the productivity stack in a competitive environment, you're not going to do well. The differentiator is adoption: people who embraced these tools have done dramatically better than people who dismissed them based on a tweet they read. If you're still skeptical, I'd encourage you to actually try them rather than form opinions from social media takes.

Agents: The Long Game

I talked about models having agency to complete tasks with tools and memory. A year ago, we were just getting "agent-like capabilities." Now it feels like agents are everywhere.

But I agree with Andrej Karpathy's take: this wasn't really "the year of agents." His argument is that building truly useful agents is more of a decade-long project than a single-year headline. Agents are here to stay—they're just a thing now—but who knows how long until they become genuinely autonomous and reliable.

Fact Box: Karpathy on Agents

When OpenAI COO Brad Lightcap called 2025 "the year of agents," Karpathy responded by proposing "the decade of agents (2025-2035)" instead. His argument: current agents are impressive but still lack sufficient intelligence, multimodality, and continual learning for true autonomy.

Source: Dwarkesh Podcast, OfficeChai

The general idea of the machine learning flywheel—where we build models to extend the frontier of tooling to build the next model—just keeps paying dividends. It's the culmination of everything scaling together: dial up engineering, it gets better; dial up research, it gets better; dial up hardware, it gets better. Every axis is covered. That's a good candidate for exponential growth, and I think it'll continue into 2026.

MCP (Model Context Protocol) has been huge for extending agents into different applications. People have integrated Cursor with things like Blender and other tools you wouldn't expect. Models have gained significant spatial awareness, making them better at 3D applications. And the visual capabilities are frankly superhuman now with things like Gemini 3.
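For readers who haven't looked under the hood: MCP messages use JSON-RPC 2.0 framing, and a client asks a server to run one of its tools with a `tools/call` request. The sketch below just builds such a request; the tool name `create_cube` and its arguments are hypothetical stand-ins for whatever a Blender-style MCP server might actually advertise.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 framing
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {
            "name": tool_name,   # hypothetical tool name for illustration
            "arguments": arguments,
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool and arguments; a real server lists its tools via tools/list.
    print(make_tool_call(1, "create_cube", {"size": 2.0, "location": [0, 0, 0]}))
```

The agent never needs to know Blender's internals; it only needs the tool's name and argument schema, which is why MCP made so many unexpected integrations cheap to build.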

Computer Use: Still Experimental, But Real

I predicted that full computer use would remain experimental while sandboxed tools would handle more of our daily work. Pretty spot on.

We now have Claude for Chrome (Anthropic's browser extension, public beta December 2025), Comet Browser from Perplexity (launched July 2025, went free in October), and Atlas from OpenAI (their sandboxed browser agent, October 2025, macOS only initially).

These tools exist and work, but they're still shaky. The models have to process frames and deal with latency, so their intelligence feels limited compared to what I'm used to in a chat interface. I don't trust Atlas to do anything mission-critical yet because its underlying model isn't as capable as the ones I use for text. But the foundation is there.

Research Tools: A Major Hit

I said tools like Google's Gemini Deep Research could revolutionize data analysis. When I wrote that prediction, Deep Research was brand new. It turned out to be one of the defining products of the year.

Every big lab shipped their own research offering. OpenAI's Deep Research launched in February 2025, powered by dedicated models (officially o3-deep-research and o4-mini-deep-research in their API).

Fact Box: Deep Research Models

OpenAI's Deep Research runs on purpose-built models: o3-deep-research and o4-mini-deep-research. In 2025, they also integrated research capabilities into ChatGPT's agent mode with a visual browser.

Source: OpenAI Platform Docs, OpenAI Blog

Long-form agent-type research was something I used to do manually—spending hours reading different sources to gather information. Getting a leg up on that has been transformative. The accuracy has improved tremendously too. At the start of the year, these tools delivered hazier, less reliable information. By the end of 2025, people trust this stuff a lot more.

An interesting side effect: people have become much more aware of how human and machine errors compare. The kinds of research mistakes AI makes (bad sourcing, trusting unreliable information) are the same mistakes humans make. Becoming aware of that fact is actually the key to solving the problem.

The public is getting better at critical thinking here, even while complaining about not being able to believe anything anymore. I hear regular non-technical folk talking about sourcing and bias now. That feels significant.

For 2026, I'd love to see upgrades to OpenAI's Deep Research. We're still on the first version. A GPT-5-based model would be great, though the latency might be problematic. Maybe a 5.5-type iteration. I also expect to see a more unified agent mode architecture—the current one feels like a combination of O3 and Operator stitched together rather than something purpose-built.

Generative AI: Fooling Everyone

I predicted some folks wouldn't be able to tell what's fake, and some would think everything is fake. Both turned out absolutely true.

By the end of 2025, we'd reached the point where studies show AI audio cannot be distinguished by average listeners. Photography can fool most people. Video with Sora has reached unprecedented levels of realism.

Fact Box: AI Detection Research

Queen Mary University of London reported that AI-generated singing voices were indistinguishable from human singers in controlled listening tests. A Deezer/Ipsos survey found 97% of 9,000 respondents couldn't reliably distinguish AI-generated music from human-created tracks.

Sources: Tech Xplore, TechRadar

I've been fooled myself this year, which I found interesting. Not for long (I usually catch it after a few seconds), but it has happened a couple of times. One viral example was an AI-generated "NYPD vs ICE agent" confrontation video that spread quickly on social media before fact-checkers identified it as Sora-generated. It gained traction partly because it felt weirdly prescient, but the visuals were also just spot on.

Fact Box: Viral AI-Generated ICE Videos

Multiple AI-generated ICE-related videos went viral in 2025. AFP Fact Check confirmed videos depicting NYPD officers confronting ICE agents were AI-generated using Sora. Separately, ICE itself released an AI-generated Santa Claus video promoting self-deportation, which was widely criticized.

The good news: studies this year showed we're actually quite good at learning to detect "model fingerprints." It probably comes from our human architecture: just as we can pick up on a person's gait, we can learn to recognize a model's personality. That said, you don't want to be the person walking around confidently saying "I can tell this is AI-generated," because you probably can't reliably. I say that to myself.

There are tells, though. The yellow filter on early OpenAI images. The grainy distortion pattern on version 1.5. When it comes to LLM-generated UI design, they've all converged on this glassmorphic purple gradient Tailwind aesthetic that's instantly recognizable. Not surprising—if you don't specify anything, you get the average, and these are the most common designs in the training data.

What's fascinating is how quickly frontier capabilities became prevalent. That underlines just how much these tools are being used, which is ultimately a good thing. People probably don't need to be so quick to call out content as "AI-generated": we all know, and you're not informing anyone of anything new.

Image Generation: Correction on Naming

I predicted big updates to visual AI with "DALL-E 4." I was right about the impact but wrong about the name. OpenAI didn't release DALL-E 4—they moved to a new naming convention. The model is called GPT Image, released March 25, 2025. DALL-E 2 and 3 are being deprecated (removal scheduled May 2026).

Fact Box: OpenAI Image Model Naming

There is no "DALL-E 4." OpenAI's current image generation model is GPT Image (with versions 1 and 1.5). The DALL-E brand is being retired.

Source: OpenAI Platform Documentation, Wikipedia

On the Google side, they released powerful image capabilities through their Gemini models. The diagram and infographic generation has been particularly impressive: I've seen it transform workflows in YouTube videos, presentations, architectural diagrams, and even pull requests. I've used it personally to turn complex information into flowcharts for better understanding.

Sora 2 launched September 30, 2025 with synchronized audio generation (dialogue, sound effects, ambient noise), improved physics, and a "Cameos" feature for inserting yourself into videos. Disney invested $1 billion in OpenAI in December 2025 specifically for character generation capabilities.

3D Worlds and World Models: Progress Is Real

I mentioned World Labs' work: Fei-Fei Li's company launched Marble in November 2025, its first commercial generative world model for creating persistent, downloadable 3D environments from text and images. Google DeepMind released Genie 3, which generates real-time interactive 3D environments at 720p/24fps (though it's still in limited research preview).

Fact Box: World Models in 2025

- Marble (World Labs): First commercial generative world model, $20-35/month

- Genie 3 (DeepMind): Foundation world model for interactive environments, 720p/24fps

Sources: TechCrunch, Time

The end game I'm imagining: a world model that acts as an assistant, with an LLM inside it that can carry out tasks and simulate anything on screen. If we can solve the memory problem, you could have continuous assistants, continuous video feeds, continuous generative games. This might require hybrid technology—digital systems alongside neural pathway scaling—but it feels more possible than ever.

Audio and Music: GAWs Are Real

I predicted Generative Audio Workstations would emerge. Suno delivered with Suno Studio in September 2025, a browser-based music editor and creation flow. It's basic and early, with rough edges around cloning and splitting tracks, but it's a start. They're generating all this data, which will enable better stem production and mixing in the future.

Video editors have incorporated AI tools. There's a whole ecosystem of video startups making genuinely impressive tools. An individual can now produce shorts that are higher quality than some big productions. Let's be frank about that.

Fact Box: AI Music Label Deals

The major labels pivoted from fighting to partnering in 2025:

- Udio + Universal Music: Settlement October 2025

- Udio + Warner Music: Settlement November 2025

- Suno + Warner Music: "Landmark" settlement November 25, 2025; $250M Series C at $2.45B valuation

- Sony: Still in litigation

Sources: Rolling Stone, Hollywood Reporter, AP News

At the end of 2025, we had to reckon with the fact that game developers use generative AI and must do so to stay competitive. Some "shame casting" happened toward developers of games like Arc Raiders and Clair Obscur: Expedition 33 for using generative AI. But Expedition 33 won Game of the Year at The Game Awards 2025, and you could argue that an indie studio did that well because of generative AI, not in spite of it.

Fact Box: Game AI Controversies

- Arc Raiders: Embark Studios uses AI text-to-speech for NPC voices; voice actors are compensated

- Clair Obscur: Expedition 33: Won GOTY at The Game Awards 2025 but had Indie Game Awards rescinded due to AI placeholder textures that "slipped through" to release

Sources: GamesRadar+, Dexerto

The anti-AI art movement is becoming problematic in many ways. I was an artist myself for years, and I still produce art. I believe in expression—for everyone. The arguments remind me of Photoshop debates from years ago, and analog-versus-digital debates before that. They'll pass. I'd encourage people to be kind about it and give folks an off-ramp from positions they've hitched their wagon to.

AI Music Stats: A Correction

I mentioned that "30% of what music listeners do is AI now." That's not quite right. The actual statistic: 34% of new music uploaded to Deezer is AI-generated. However, AI music accounts for only ~0.5% of total streams. Big difference between upload volume and actual consumption.

Fact Box: AI Music by the Numbers

- 34% of daily new uploads to Deezer are fully AI-generated (50,000+ tracks/day)

- ~0.5% of total streams are AI-generated tracks

- 97% of listeners can't reliably distinguish AI music in surveys

- Breaking Rust's "Walk My Walk" hit #1 on Billboard Country Digital Song Sales (November 2025)—first AI song to top a Billboard chart

Large Language Models: The Slow and Steady March

I predicted the reasoning crank would turn a few times, we'd get sharper reasoning and better performance, faster models, longer contexts (500k to 1M+ tokens), and lower costs. Mostly right.

The reasoning improvements were real. Some models got faster, but GPT-5 ended up being a lot slower than I expected. Context windows around 500k happened. Lower costs came through for equivalent-performance models, but the reasoning models cost substantially more.

I said shrinking models would get scarily close to their beefier counterparts. Gemini 3 Flash versus Pro proved that—very close performance. Bigger models maxed out benchmarks, making it genuinely hard to figure out what's "good enough." People at the end of 2025 still debate whether Opus 4.5, GPT-5.2, or Gemini 3 Pro is "best." It's debatable because personalities have changed so much, and people have their own preferences.

Vendor Predictions: OpenAI

I predicted O3-mini and O3 would hit in Q1 and later 2025. That was accurate—O3-mini launched January 31, 2025, and O3 released April 16, 2025.

I speculated about whether O3 was based on a new training run or fine-tuning on GPT-4. I need to correct myself here: O3 is not fine-tuned on GPT-4. It's a purpose-built reasoning model using reinforcement learning, evolved from the o1 architecture. The o-series and GPT-series are parallel but distinct model families.

Fact Box: OpenAI 2025 Model Timeline

- O3-mini: January 31, 2025

- O3: April 16, 2025

- GPT-5: August 7, 2025 (first "unified" model, 400K context window)

- GPT-5.1: November 12, 2025

- GPT-5.2: December 11, 2025

Sources: Wikipedia, TechCrunch, OpenAI

I predicted OpenAI would launch an agent marketplace—they did (the GPT Store evolved). I predicted an OpenAI browser forked from Chromium—Atlas happened, though I'm not sure about the Chromium part.

The "vibe check" mattered as much as I thought it would. GPT-5's early reception was "impressive but not as large a gain as from GPT-3 to GPT-4." Latency, coding efficiency, and reasoning abilities determined how people felt about it more than raw benchmarks.

Vendor Predictions: Google

I said Google would push affordable, polished models. Gemini 3 delivered—Gemini 3 Pro released November 18, 2025 in preview, hitting 1501 Elo on LMArena and 91.9% on GPQA Diamond. Gemini 3 Flash followed December 17, 2025.

I predicted Gemma 3 would dominate lightweight use cases. Gemma 3 did release March 12, 2025 with sizes up to 27B parameters—it was impressive. However, I was wrong about Gemma 4: it hasn't been released yet as of January 2026.

Fact Box: Gemini 3 Pre-training vs Post-training

I'd heard claims that Gemini 3's gains came primarily from pre-training. That's not quite accurate. Google DeepMind VP Oriol Vinyals stated improvements came from both: "Pre-training: the delta between 2.5 and 3.0 is as big as we've ever seen... Post-training: Still a total greenfield."

Source: OfficeChai, Tomasz Tunguz

NotebookLM got deeper voice integration, podcasting innovations, and research tools—the Gemini 3 treatment I predicted.

Vendor Predictions: Anthropic

When I wrote my 2025 predictions, Opus 3.5 still hadn't been released. It never shipped under that name: the Opus line moved on to Claude Opus 4 in May 2025 and then Claude Opus 4.5, launching November 24, 2025. Opus 4.5 hit 80.9% on SWE-bench Verified, state-of-the-art for coding at release. Anthropic claims it outperformed any human candidate on their internal performance engineering exam.

Fact Box: Anthropic 2025 Releases

- Claude Sonnet 4.5: Late September 2025

- Claude Haiku 4.5: October 15, 2025 (matched Sonnet 4's performance at 1/3 the cost; first Haiku with extended thinking and computer use)

- Claude Opus 4.5: November 24, 2025

They didn't get true multimodality—images but no voice, and they don't generate images. No great voice offering from Anthropic; Google's is still better. They still don't have the duplex-style "talk at the same time" true voice I'm looking for.

MCP got huge as a standard. Anthropic announced it was donating MCP to the newly established Agentic AI Foundation under the Linux Foundation. OpenAI's Apps platform (Apps SDK) explicitly frames apps as an MCP server plus a conversational interface, which validates the "tooling standards" narrative I predicted.

The small models were a big thing this year. Haiku 4.5 was really great—cheaper, more useful, and good at tool calling. That's huge for building reliable agent systems.

Vendor Predictions: Meta

This was probably my biggest miss. I predicted Llama 4 would beat top-scoring open-source models. What actually happened was a controversy.

Llama 4 released April 5, 2025 (Scout and Maverick variants), but the rollout faced significant backlash. Meta submitted an "experimental" version optimized for benchmarks to LMArena, drawing public criticism. A coding benchmark showed ~16% on Aider Polyglot, and users reported poor real-world performance at long contexts. "Disaster" might be strong, but "scandal" appeared in multiple headlines.

Fact Box: Llama 4 Controversy

Meta was accused of submitting a benchmark-optimized "experimental" version of Llama 4 to LMArena that differed from the publicly released model. Real-world performance disappointed users, particularly at long contexts.

Sources: TechCrunch, The Register, Composio

I was right about the Meta Ray-Bans. The Meta Ray-Ban Display glasses launched September 30, 2025 for $799, bundled with the Meta Neural Band—an EMG wristband that detects muscle signals for gesture control. First Meta smart glasses with an in-lens display (600×600 pixels, 90Hz). Nothing from Quest 4 though.

VR and the metaverse got even more derided this year, but I still think virtual reality and augmented reality are the final interfaces for a lot of things. Meta will ultimately reap dividends from their spatial stack investment, as will Apple when they eventually ship glasses.

I mentioned third-party headsets running Horizon OS—the Quest 3S Xbox Edition did release June 24, 2025, but it's really a Meta-manufactured device with Xbox styling rather than a truly Microsoft-developed headset. More significantly, Meta paused the entire third-party program in December 2025. The announced Asus and Lenovo headsets never shipped.

Vendor Predictions: NVIDIA

RTX 50 series happened. There were Witcher 4 previews at CES, though no Cyberpunk 2 teasers. The RTX 5090 does have 32GB of GDDR7 as I predicted.

I mentioned "Groq acquisition"—I need to correct that. NVIDIA didn't acquire Groq. It was a $20 billion licensing deal (December 24, 2025) for Groq's LPU technology and patent portfolio plus a talent acquisition (CEO Jonathan Ross and key employees joined NVIDIA). GroqCloud continues operating independently. Strategically meaningful, but not an acquisition.

Fact Box: NVIDIA-Groq Deal

NVIDIA paid approximately $20 billion on December 24, 2025 for a licensing agreement covering Groq's LPU inference technology and patent portfolio, plus talent acquisition. This was not an acquisition—Groq remains independent and GroqCloud continues operating.

Sources: Yahoo Finance, Medium

That Groq acceleration is really nice. If NVIDIA starts offering that speed as a service in its data centers, we're on to something. Imagine ChatGPT running that fast.

US-China AI Rivalry

I mentioned GPU supply chains and strategic open-sourcing. The rivalry has been exciting: Chinese labs went essentially toe-to-toe with the big frontier labs, whose models are neither open source nor cheap. Competition is good.

Trump's deal allowing NVIDIA to sell GPUs to China (specifically H200 chips tied to broader trade talks) was a change in policy. Reuters reported on this.

Open-Weight Local Models

I predicted laptops would run models powerful enough to replace some top-tier offerings. That happened. By the end of the year, we got models like GLM 4.6 (Zhipu), MiniMax M2, and NVIDIA's Nemotron line (including Nemotron 3 Nano, which can run locally on RTX 3090/4090 with 24GB VRAM using 4-bit quantization).

Fact Box: Local Model Requirements

Nemotron 3 Nano (30B total / 3.2B active parameters) runs on a single RTX 4090 with 24GB VRAM using 4-bit quantization.

Source: GameGPU
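The arithmetic behind that fact box is easy to check: weights stored at 4 bits take half a byte per parameter, so a 30B-parameter model needs roughly 15 GB for its weights, leaving headroom on a 24GB card. The helper below is a rough, weight-only sketch; it ignores the KV cache, activations, and quantization overhead such as scale factors, all of which add to the real footprint.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    bytes_total = total_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    # Weight-only estimates for a 30B-parameter model (ignores KV cache and overhead).
    for bits in (16, 8, 4):
        print(f"{bits}-bit: ~{weight_memory_gb(30e9, bits):.0f} GB")
    # prints ~60 GB, ~30 GB, ~15 GB
```

Note that a mixture-of-experts model like this one still has to hold all of its total parameters in memory; the 3.2B active figure mainly buys speed per token, not a smaller footprint.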

Quick Notes: Audio Transcription and Translation

I have to give a shout-out to audio transcription models and the companies focused on text-to-speech and speech-to-text. The innovation has been great.

Sesame's Conversational Speech Model launched February 2025, explicitly aimed at "crossing the uncanny valley of conversational voice." ElevenLabs raised $180 million in January 2025 at a $3.3 billion valuation and launched Eleven Music in August with licensing from Merlin Network and Kobalt.

Fact Box: Sesame AI Voice Model

Sesame's CSM model aims to solve uncanny valley issues through contextual awareness and emotional intelligence. The 1B-parameter variant was open-sourced March 13, 2025. The accompanying research is titled "Crossing the uncanny valley of conversational voice."

Sources: Sesame Research, R&D World

Wispr Flow has been useful this year, even though the subscription pricing is annoying. It works well, which is why I subscribed, and it mattered all the more because a trapped nerve made it impossible for me to type for the last part of the year.

Remarkably, that was one of my most productive periods despite the injury. I was blown away that I could do everything without being able to use my right hand (I can still only use it in a very limited way). That's been really cool.

The prevalence of this in translation is fascinating. So many young people in other countries are realizing they probably don't need to learn another language, because communication is no longer gated by language. With natural translation, you could keep your native tongue culturally intact and not have to assimilate into cultural homogeneity.

I also think about growing up and having to learn cursive for 10 years, even though I suspected it might go out of use because computers were coming. The debates I had back then are the same debates happening today. We used to write in cuneiform, pressing little wedges into clay tablets. I'm sure some people were upset about changing that too.

Part Two: My 2026 Predictions

The Big Picture

2026 is going to be another year of continued gains—probably linear rather than exponential breakthroughs. But we'll see bumps and innovation in different spaces.

Models will get bigger, better, and faster. No doubt about that. By the end of 2026, we'll start to see some new data centers come online, enabling bigger training runs. But the full scaling gains won't pan out until 2028.

I expect OpenAI to come out with some sort of 5.5 model that's significantly faster, presumably from a bigger training run. They could save that for a "GPT-6" if the industry starts moving faster. But I think the GPT-5 naming was a problem: the previous frontier model hadn't been out that long, so calling this one "5" with big expectations attached was psychologically complicated.

First impressions last. If you have a bad experience your first time with a model, it sticks with you.

Google, Anthropic, and the Model Race

I expect Google's final Gemini 3 Pro release to be a significant boost. They'll get innovations from the Flash release that engineers noted didn't make it into Pro in time.

Anthropic will continue bringing out amazing models without fanfare. They're a bit behind on the product curve and I don't think that'll change, but they'll focus on the business/enterprise side and maintain their lead through first-mover advantage and name recognition in that space.

Interesting opportunity: an unlimited Gemini 3 Flash business offering. You could price that well, and a lot of people would go for it. A truly good, unlimited, frontier-speed offering would be incredible for 2026.

What I'm realizing about Gemini 3 Flash specifically: the model suddenly got good enough to be both fun and smart at the same time. Smart and fast together—that's a next-gen experience. Frontier intelligence at low cost and high speed is where we want to be by the end of 2026.

The Developer Experience

At some point in 2026, for all intents and purposes, the kind of work I do—web development, even advanced enterprise software development—will be doable by these models.

But that doesn't mean a regular person can just generate an app. Roles exist for a reason. Specialization matters. Experience is a multiplier. Whatever experience you have in a field, even if you gained it using models, is valuable.

I can imagine future "vibe coders" with no traditional programming background but enough experience from building apps through AI-assisted development that they can get good work done. We're competing with people who have the same technology. The question is how much better you use it than they do. And god help the people who aren't using the technology at all.

Meta's Potential Surprise

Meta might be a big surprise in 2026. Long bet, but hear me out.

They've paused, but their superintelligence team is apparently working on a new frontier-level model with omni capabilities (images, video, sound). They also just bought Manus (the AI agent startup) for over $2 billion in late December 2025, and that acquisition could be huge if they integrate everything together.

Fact Box: Meta Acquires Manus

Meta closed the Manus acquisition December 29-30, 2025 for over $2 billion, negotiated in approximately 10 days. Manus, a Singapore-based AI agent startup with $100M+ annualized revenue, will continue operating its subscription service while employees join Meta.

If you integrate the glasses with the model and get a really nice voice model in there, you could have a phenomenal experience. The glasses aren't good enough right now for that, but the potential is obvious.

Of course, Apple will likely come out with glasses at some point. Maybe a preview in 2026. Rumors suggest Apple's been talking with Google about a Gemini model for Siri—a practical catch-up move while they build internally.

Fact Box: Apple + Gemini for Siri

Bloomberg reported Apple is paying approximately $1 billion per year for a custom 1.2 trillion parameter Gemini model for a Siri overhaul. Expected rollout: Spring 2026 with iOS 26.4.

When you get that integration, you can imagine voice-driven devices becoming the norm.

OpenAI Hardware

The OpenAI + Jony Ive device is hotly tipped. OpenAI acquired Jony Ive's startup "io" for $6.4 billion in May 2025. First prototypes were confirmed in November 2025, with the device expected "in under two years."

Some leaks suggest one form factor is a pen. If it had a 360 camera, handwriting recognition, and the nice new voice architecture, it could be exciting—especially if there's a range of products.

Fact Box: OpenAI Hardware Status

- May 2025: OpenAI acquired Jony Ive's "io" startup for $6.4 billion

- November 2025: First prototypes confirmed

- December 2025 leaks: "Gumdrop" codename; pen form factor rumored but not officially confirmed

- Court filings confirm: device won't be a wearable or earbuds; won't launch before 2026

Sources: CNBC, Built In, Dataconomy

The idea of something you have at all times that can listen in is interesting. It dawned on me this year: if I use Atlas all day at work and have some voice device recording my interactions, a model could have all the same context I have from my 9-to-5. That's a fascinating notion—you could share that context with colleagues in a company setting.

Memory: The Big 2026 Unlock

Memory is probably going to be the biggest unlock of 2026.

The labs are always talking about new research, and hints suggest we're going to get some sort of "perfect memory" system. They're trying to solve the problem properly—done once, so we don't have to worry about it anymore.

Memory is the big problem right now. LLMs still forget everything when you start a new chat. If we could get long-term agents with persistent identities, that could be transformative.

I can envision adapter files saved alongside your work and applied to any model that supports them: essentially an identity attached to a model. Mechanically, it's matrix addition. Like a LoRA adapter, you add the adapter's weights to the base model's and get something coherent.
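The "matrix addition" idea maps closely onto how LoRA adapters are merged today. Below is a minimal sketch, assuming a standard LoRA setup: the adapter file stores two small matrices B and A, and merging computes W + (alpha / rank) · BA. The function names (`merge_adapter`, `adapter_params`) and the toy numbers are hypothetical, chosen only for illustration; this is not any lab's actual memory format.

```python
# Hypothetical sketch: a LoRA-style "identity" adapter stored as two small
# matrices (B: d_out x r, A: r x d_in) and merged into base weights W.
# Pure Python lists are used so the example has no dependencies.


def matmul(x, y):
    """Multiply matrix x (n x k) by matrix y (k x m)."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def merge_adapter(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * (B @ A), the usual LoRA merge."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


def adapter_params(d_out, d_in, rank):
    """Numbers stored in the adapter (B and A) versus the full d_out x d_in matrix."""
    return rank * (d_out + d_in), d_out * d_in


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 base weights
    b = [[1.0], [2.0]]               # 2 x 1
    a = [[3.0, 4.0]]                 # 1 x 2, so rank = 1
    print(merge_adapter(base, b, a, alpha=1.0, rank=1))
    # → [[4.0, 4.0], [6.0, 9.0]]
    # A rank-16 adapter on a 4096 x 4096 layer stores far fewer numbers.
    print(adapter_params(4096, 4096, 16))
    # → (131072, 16777216)
```

Because merging is plain addition, applying two adapters in sequence just adds both deltas to the base weights. The size comparison also hints at why an adapter could be a cheap, portable file: here it holds under 1% of the numbers in the layer it modifies.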

Adapters like this become another scaling axis: how much a model can remember becomes a number on a chart, something you can charge for. An adapter with hours of training on a codebase is presumably valuable. There'll be infrastructure built around maintaining these identities.

You might want an adapter to remember things on one project but not another. We're experimenting with RAG-type memory systems in ChatGPT now, but they're janky. I don't like how memories leak into other conversations. I also don't like memory being stored as text tokens, because text can be hijacked: I don't want a colleague slipping in a single token that affects my entire developer experience.

NVIDIA and Speed

If NVIDIA starts offering Groq-level acceleration as a service in data centers, we're on to something. If ChatGPT is that fast by the end of 2026, things will be moving very fast indeed.

It's not so much that the systems are "self-improving" in an AGI sense. Models like GPT-5 will keep solving more math problems, but the more important point is this: if we're all using these tools, we're all benefiting at the same time.

At the start of the year, there was debate about whether these tools actually made people more productive. By the end of 2025, most boardrooms that invested properly should have graphs showing productivity gains: more Jira tickets closed, velocity going up. It's undeniable.

That graph should keep going up. The catch is that developers will be expected to produce more, and expectations are hard to manage when people think you can knock out 10,000 lines in a day. The cognitive debt of understanding all that code is high, and it puts real pressure on developers.

Then again, developers were always under pressure. I told my colleagues years ago that the big surprise of working with AI coding tools has been that the job hasn't changed that much. You're solving problems with technology. The technology was always abstracted away from us in some way, and it will continue to be.

It's never been a better time to be a developer. You can get so much done. The success of small teams like the one behind Clair Obscur: Expedition 33 supports my theory that companies will get smaller. We'll have smaller groups making great products, and household names made by small teams. I don't think we've fully internalized that yet.

Frontier Capabilities I Want to See

I'd like to see a video assistant with an expressive face. That would be exciting.

I'd like to see at least a demo of a generative virtual reality experience. Meta should release their codec avatars publicly, and if they could get those avatars into their glasses, that would be really slick. They presumably have beta products, since they've been working on this for years. Sora 2 has embeddings that could enable something similar.

It's interesting how companies have to release things before the technology goes obsolete. I think about GTA 6—it'll eventually release, but it's fascinating that we got robust AI before Grand Theft Auto 6.

Music in 2026

The big labels ultimately chose to work with the technology instead of fighting it indefinitely, unlike with Napster: Suno and Udio have signed deals with major labels. I don't love that you can't download your own work on some tiers, but if it's easy and we can remix songs from big artists, that could be exciting for social networks.

Despite what people say, analytics show most people use AI art for personal reasons, so complaining about it on the internet misses the point. One of the great joys of art is expression for personal satisfaction. If people want to write little songs for themselves, that's great.

The economy around this does need adjusting. It's not right that talented musicians earn nothing while success goes to people with money. Video models matter here too: video is one of the few creative mediums that even middle-class people can't easily access without AI.

I also think Sora's approach is fundamentally different: people are creating and sharing things rather than arguing. That's a more beautiful world, despite what critics say.

On scaling: if we can do 4K video now, expect 8K next year, along with longer durations at 4K and 1080p. Expect better quality, higher fidelity, richer embeddings, and audio models that generate longer output.
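For a rough sense of what each resolution step means, assuming the common UHD definitions (3840×2160 for 4K, 7680×4320 for 8K), each jump quadruples the pixels per frame:

```latex
\begin{aligned}
\text{1080p: } & 1920 \times 1080 = 2{,}073{,}600 \text{ pixels} \\
\text{4K: } & 3840 \times 2160 = 8{,}294{,}400 \text{ pixels} = 4 \times \text{1080p} \\
\text{8K: } & 7680 \times 4320 = 33{,}177{,}600 \text{ pixels} = 4 \times \text{4K}
\end{aligned}
```

If generation cost scaled with raw pixel count (a simplifying assumption; models that work in a compressed latent space may not scale this way), 8K would mean roughly four times the work per frame before any gain in duration.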

What I Don't Expect

I don't foresee huge, revolutionary breakthroughs on the horizon; memory is the most likely candidate for one. Architectural surprises are always possible, though. With the amount of investment and research going into this space, 2026 will be absolutely huge, bigger than 2025 for sure.

Thanks for reading. Let's see how these predictions hold up.